Cool stuff in NIPS 2015 (workshop) - Time series

This post is about the NIPS 2015 time series workshop.

Table of contents

- Invited talk by Mehryar Mohri on learning guarantee on time series prediction

- Panel discussion on modern challenges in time series analysis

Invited talk by Mehryar Mohri on learning guarantee on time series prediction

- It would be nice to have his slides, but I don’t have them here. You can have a look at the additional reading materials listed below.

- The talk is mostly about a data-driven learning bound (learning guarantee) for non-stationary, non-mixing stochastic processes (time series).

Introduction and problem formulation

- Classical learning scenario: receive a sample \((x_1,y_1),\cdots,(x_m,y_m)\) drawn i.i.d. from an unknown distribution \(D\), then forecast the label \(y_0\) of an example \(x_0\), where \((x_0,y_0)\sim D\).

- Time series prediction problem: receive a realization of a stochastic process \((x_1,x_2,\cdots,x_T)\), forecast the next value \(X_{T+1}\) at time point \(T+1\).

- Two standard assumptions in current time series prediction models

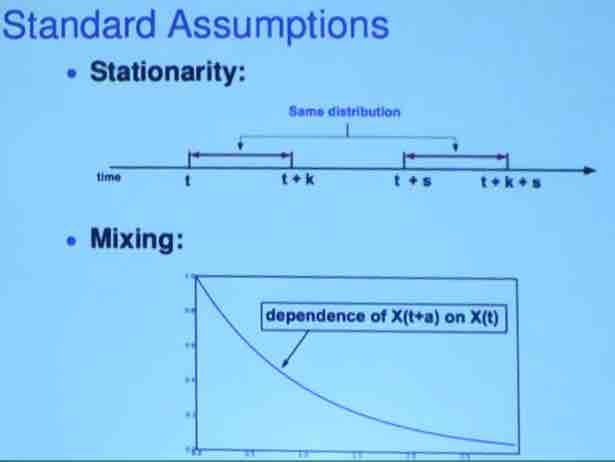

- Stationarity assumption: the samples in any sliding window (time period) of a fixed length follow the same distribution, regardless of where the window starts.

- Mixing assumption: the dependency between samples at two time points decays quickly as the gap between them grows.

- These two assumptions seem natural and are widely adopted in the machine learning community for time series prediction. Check the video for a collection of literature supporting this argument.

- However, these two assumptions are problematic:

- They consider only the distribution of the samples and ignore the learning problem.

- These two assumptions are not testable.

- Often they are not valid assumptions.

- Examples:

- Long-memory processes might not be mixing: autoregressive integrated moving average (ARIMA), autoregressive moving average (ARMA).

- Markov processes are generally not stationary (a Markov chain is stationary only when it is started from its stationary distribution).

- Most stochastic processes with a trend, a periodic component, or a seasonal pattern are not stationary.

- Now the question is whether it is possible to learn these time series or stochastic processes.
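To make the non-stationary examples above concrete, here is a minimal Python sketch of a process with a linear trend. The function names, the slope, the noise level, and the window length are all assumptions chosen for illustration; they do not come from the talk. Under stationarity the mean of every window would be roughly the same, whereas here it drifts upward from window to window.

```python
import random
import statistics


def trend_series(T, slope=0.1, noise_std=1.0, seed=0):
    """Synthetic series x_t = slope * t + Gaussian noise (hypothetical example)."""
    rng = random.Random(seed)
    return [slope * t + rng.gauss(0.0, noise_std) for t in range(T)]


def window_means(series, window):
    """Mean of each consecutive, non-overlapping window of the given length."""
    return [
        statistics.fmean(series[i:i + window])
        for i in range(0, len(series) - window + 1, window)
    ]


series = trend_series(T=400)
means = window_means(series, window=100)

# A stationary process would give nearly equal window means.
# With a trend, each window mean is about slope * window larger than the last.
for k, m in enumerate(means):
    print(f"window {k}: mean = {m:.2f}")
```

A check like this can only expose one particular kind of non-stationarity (a drifting mean) on one realization; it is not a test of the stationarity assumption in general.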

Model

- Robust learning models which do not make these strong/wrong assumptions are required for time series learning problems.

- What is a suitable loss function for time series prediction?

- Not generalization error

- \(R(h) = \underset{x}{E}[L(h,x)]\).

- Generalization error captures the loss of a hypothesis \(h\) over a collection of unseen data.

- Introduce a path-dependent loss

- \(R(h,x^T) = \underset{x_{T+s}}{E} [L(h,x_{T+s}) \mid x^T]\).

- Seek a hypothesis that has the smallest expected loss in the near future, conditioned on the current observations.

- How can the path-dependent loss be analyzed using only assumptions that can potentially be valid? Two ingredients:

- Expected sequential covering numbers

- Discrepancy measure:

- \[\Delta = \sup_{h\in H}\left|R(h,x^T)-\frac{1}{T}\sum_{t=1}^{T}R(h,x^{t-1})\right|\]

- The largest possible gap, over all hypotheses, between the expected loss in the near future and the average expected loss over the time points actually observed. It measures the non-stationarity of the process.

- The discrepancy is difficult to compute, so an estimate is used instead.

- The talk proposes a learning guarantee (bound) for time series prediction.

- \[R(h,x^T) \le \frac{1}{T}\sum_{t=1}^{T}L(h,x_t) + \Delta + \sqrt{\frac{8\log\frac{N}{\delta}}{T}}\]

- This bound is essentially the same as the classical learning bound based on the i.i.d. assumption, except for an additional term describing the discrepancy.

- Minimizing the error bound leads to a convex optimization problem.
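As a rough illustration of how the three terms of the bound combine, the sketch below evaluates its right-hand side for a single hypothesis. It assumes that the per-step losses \(L(h,x_t)\), an estimate of the discrepancy \(\Delta\), the quantity \(N\), and the confidence parameter \(\delta\) are all given as plain numbers. The function names and the example values are hypothetical and not taken from the talk.

```python
import math


def empirical_loss(losses):
    """Average observed loss, (1/T) * sum_t L(h, x_t)."""
    return sum(losses) / len(losses)


def complexity_term(T, N, delta):
    """Last term of the bound, sqrt(8 * log(N / delta) / T)."""
    return math.sqrt(8.0 * math.log(N / delta) / T)


def bound_rhs(losses, discrepancy, N, delta):
    """Right-hand side of the bound for one hypothesis h.

    `discrepancy` is an estimate supplied by the caller; how to obtain it
    is outside the scope of this sketch.
    """
    T = len(losses)
    return empirical_loss(losses) + discrepancy + complexity_term(T, N, delta)


# Made-up inputs: 100 observed losses of 0.5 each, discrepancy estimate 0.1.
losses = [0.5] * 100
rhs = bound_rhs(losses, discrepancy=0.1, N=10, delta=0.05)
print(f"bound on the path-dependent loss: {rhs:.3f}")
```

In this sketch the complexity term shrinks at a rate of \(1/\sqrt{T}\) as more observations arrive, while the discrepancy term is whatever the caller passes in, which matches the remark that the discrepancy is the only addition to the i.i.d.-style bound.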

Additional reading materials

- The following two papers, published at the NIPS conference this year, might be worth checking out

- There is also a talk at the NIPS 2015 conference on time series prediction, presented by Vitaly Kuznetsov (joint work with Mehryar Mohri): link to the video.

Panel discussion on modern challenges in time series analysis

- Algorithms that are able to learn from other time series, something like transductive learning.

- Algorithms for time series prediction when only a few data points are available.

- Time series prediction that also considers other factors. For example, the shopping history of a particular user enables prediction of that user's purchase at the next time point. What if we also observe, or predict at the same time, that this user is expecting a baby? Such contextual information should be added to time series prediction.

- Time series prediction in high-frequency trading, where the time resolution is moving from milliseconds to nanoseconds.

- Heterogeneous data sources and the fusion of a variety of time series data.

- Algorithms that can handle either very large-scale or very small time series data.

- Time series models in finance

- Financial modeling and high-frequency trading.

- Different stock trading centres use different option matching mechanisms.

- Structural heterogeneity in financial data should be addressed in order to understand the price, volume, etc. of stocks on the exchange market.

- Outputting a confidence interval for predictions is very important when developing machine learning models (Cortes).

- Why deep learning is not used on financial data (Cortes):

- A deep learning model is difficult to update, whereas financial data is essentially online.

- Financial data is very noisy, so a nonlinear model will overfit the training data. A linear model with regularization seems to be a better alternative.
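The last two points can be illustrated with a minimal sketch of a regularized linear model that is updated online, one observation at a time. This is a toy with synthetic data, not anything presented by the panel: the scalar model, the learning rate, the L2 penalty, and the data-generating process are all assumptions made for illustration.

```python
import random


def online_ridge_step(w, x, y, lr=0.05, l2=0.01):
    """One SGD step on 0.5 * (w * x - y)**2 + 0.5 * l2 * w**2 for a scalar weight w."""
    grad = (w * x - y) * x + l2 * w
    return w - lr * grad


rng = random.Random(0)
w = 0.0
for _ in range(2000):
    # Synthetic noisy stream: a linear signal with true weight 2.0 plus Gaussian noise.
    x = rng.uniform(-1.0, 1.0)
    y = 2.0 * x + rng.gauss(0.0, 0.5)
    # The model is updated as each point arrives; no retraining on past data.
    w = online_ridge_step(w, x, y)

print(f"learned weight: {w:.3f}")
```

Each update costs a constant amount of work, which is what makes this kind of model easy to keep current on streaming data. The L2 penalty pulls the weight slightly toward zero, so the learned value sits a little below the true weight.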